WORKSHOP 3 SCHEDULE

Day 1

1030 : Coffee

1100 : Welcome & introduction - Alice Eldridge & Paul Stapleton

1115 : Algorithmic Listening Project Updates

Algorithms that Matter - Hanns Holger Rutz

Systemic Improvisation - Palle Dahlstedt

1300 : Lunch (Sussex Humanities Lab)

1400 : Oi, Algorithm! Chew On This! - Assaying the Noise Between Human and Algorithm

John Bowers & Owen Green

1500 : Coffee

1515 : Adaptive & Reflexive Musical Listening Algorithms

A sense of being ‘listened to’ - Tom Davis & Nick Ward

Self-listening for music generation - Ron Chrisley

1700 : End

1830 : Dinner at The Rose Hill

2030 : Long Table discussion on Humanising Algorithmic Listening in Culture and Conservation

2200 : Finish (latest)

Day 2

1030 : Coffee

1100 : Listening with Dynamical and Chaotic Systems

Marije Baalman & Chris Kiefer

1300 : Lunch (Sussex Humanities Lab)

1400 : Audio Feature Surfing

Introduction to CataRT - Diemo Schwarz

Getting to know big audio data - Alice Eldridge

Listening out for gender-based conversational dynamics - Ben Roberts

Feature Hacking - all

1630 : Coffee

1645 : Closing Panel - David Kant, Parag Mital, Simon Waters

1745 : End

… Dinner and drinks for those staying

SESSIONS

Day 1

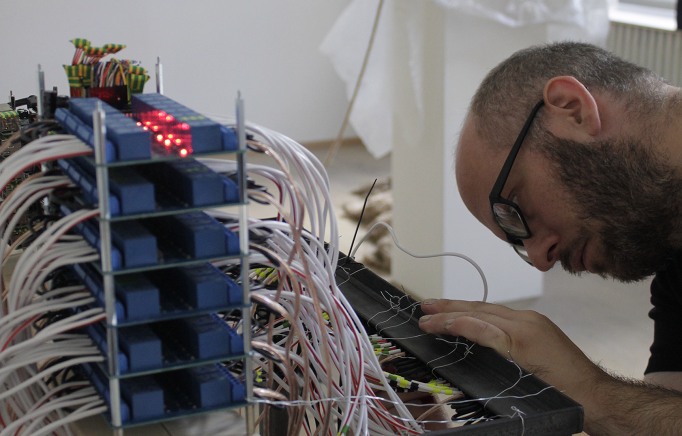

Hanns Holger Rutz

Algorithms that Matter

Algorithms that Matter (Almat) is a three-year FWF-funded artistic research project (AR 403) run by Hanns Holger Rutz and David Pirrò at the IEM Graz. It asks how algorithmic processes emerge and structure the praxis of experimental computer music. We are interested in rethinking algorithms as agents that co-determine the boundary between an artistic machine or “apparatus” and the object produced through this machine. Unravelling the seemingly stable notion of the algorithm, we look at how the experimental culture in which work with algorithms is embedded shapes our understanding of it, and how this retroacts on and changes the very praxis of composition and performance. Using as dispositifs two distinct software systems we have created, SoundProcesses and rattle, we implement a series of connected experiments, addressing research questions such as: What are the “units” of algorithms? In what way can they be de- and recomposed? What is the nature of their affordances and material traces? How can they be preserved and inform the methodology of artistic research? Special focus is put on the reconfiguration of elements, such as relaying a system to another artist/composer, and we work with a number of invited guest artists to explore the different algorithmic strategies.

https://almat.iem.at/

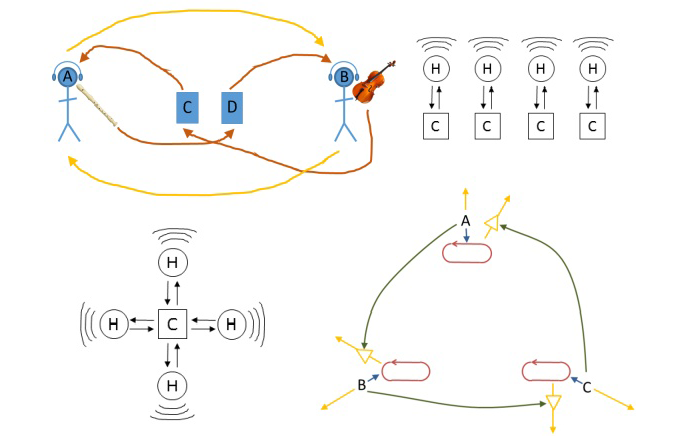

Palle Dahlstedt

Systemic Improvisation

Systemic Improvisation is a research project emerging from a series of musical works by Palle Dahlstedt and Per Anders Nilsson, exploring how computer-mediated interactions between improvisers can lead to new music. Here, an improvisation system is a configuration of human and virtual agents with communication going in both directions. Systemic improvisation is the act of a number of musicians playing in such a system. The virtual agents take input from human players, and produce visual or aural cues, which the human players react to. Together they form a complex interconnected system, with characteristic emergent behavior. Through design of (and playing on) a series of such systems, we study how musical output and musicians’ experiences depend on system configuration, cue type, time scale, and a number of other design parameters.

Oi, Algorithm! Chew On This! - Assaying the Noise Between Human and Algorithm

John Bowers & Owen Green

We take the position that what it is to be human and what it is to be algorithmic can be creatively considered as variable affairs, complexly intertwined and co-indexical, and so constitute a domain of investigation that is labile, uncertain and profoundly noisy. We propose to map some of this territory through a series of design provocations and a large portfolio of small collaborative makes, which are assembled in performance or installation, and critically reflected upon in the light of the concerns of the Network.

We will give a short performance-lecture, segueing into a discussion that details the impetus and goals for our collaboration.

Adaptive & Reflexive Musical Listening Algorithms

A sense of being ‘listened to’

Nicholas Ward & Tom Davis

How can a sense of being ‘listened to’ affect human-algorithm listening relationships? Drawing on ideas informed by the use of auditory cues in conversation, facial expression in interview techniques, and the sense of being stared at, Nick and Tom will present examples from their practice before probing the audience for insights.

Self-listening for music generation

Ron Chrisley

Although it may seem obvious that in order to create interesting music one must be capable of listening to music as music, the ability to listen is often omitted in the design of musical generative systems. And for those few systems that can listen, the emphasis is almost exclusively on listening to others, e.g., for the purposes of interactive improvisation. The project aims to explore the role that a system’s listening to, and evaluating, that system’s own musical performance (as its own musical performance) can play in musical generative systems. What kinds of aesthetic and creative possibilities are afforded by such a design? How does the role of self-listening change at different timescales? Can self-listening generative systems shed light on neglected aspects of human performance? A three-component architecture for answering questions such as these will be presented.

Day 2

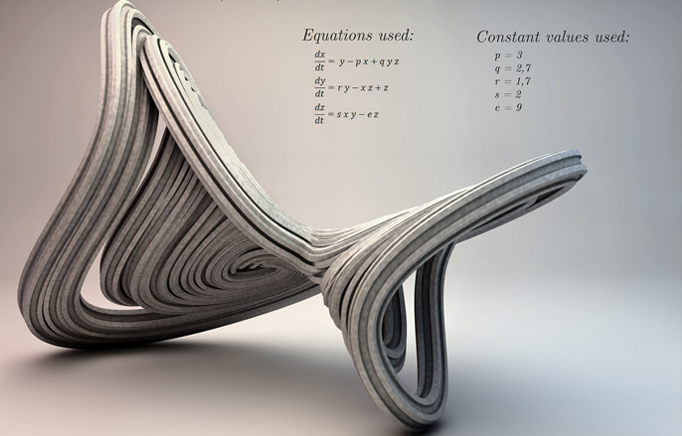

Listening with dynamical and chaotic systems

Marije Baalman & Chris Kiefer

What makes dynamical systems different from other approaches to algorithmic listening, and what are the best uses of these algorithms? We’ll explore dynamical systems hands-on, by doing some processing of live sensor data, and discuss their efficacy and applications.

Audio Feature Surfing

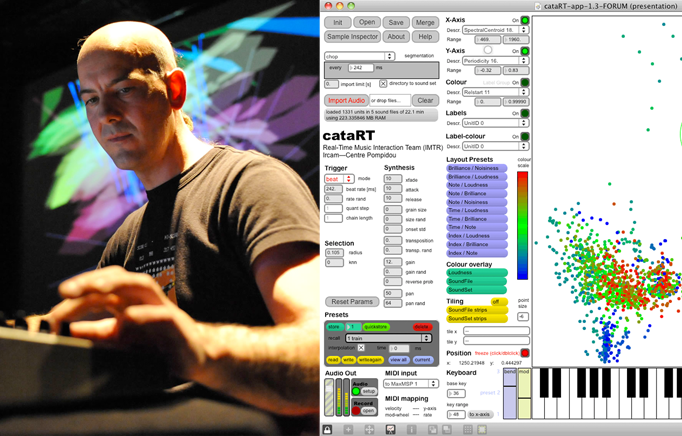

Introduction to CataRT

Diemo Schwarz

The concatenative real-time sound synthesis system CataRT plays grains from a large corpus of segmented and descriptor-analysed sounds according to proximity to a target position in the descriptor space. This can be seen as a content-based extension to granular synthesis providing direct access to specific sound characteristics.

CataRT is implemented in Max/MSP and takes full advantage of the generalised data structures and arbitrary-rate sound processing facilities of the FTM and Gabor libraries. Segmentation and sound descriptors are loaded from text or SDIF files, or analysed on the fly.

CataRT lets you explore the corpus interactively or via a target sequencer, resynthesise an audio file or live input with the source sounds, or experiment with expressive speech synthesis and gestural control.

CataRT is explained in more detail in this article and is an interactive implementation of the new concept of Corpus-Based Concatenative Synthesis.
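The selection step described above, choosing the corpus units closest to a target position in descriptor space, can be sketched in a few lines. This is only an illustration of the idea and not CataRT's own code, which is implemented in Max/MSP: the corpus, the descriptor names (`pitch_hz`, `loudness_db`), the range normalisation and the use of Euclidean distance are all assumptions made for the example.

```python
import math

# Hypothetical corpus: each unit stands for one analysed grain.
# Descriptor names and values are illustrative only.
corpus = [
    {"id": 0, "pitch_hz": 220.0, "loudness_db": -30.0},
    {"id": 1, "pitch_hz": 440.0, "loudness_db": -12.0},
    {"id": 2, "pitch_hz": 445.0, "loudness_db": -28.0},
    {"id": 3, "pitch_hz": 880.0, "loudness_db": -6.0},
]
DESCRIPTORS = ("pitch_hz", "loudness_db")


def descriptor_ranges(units, descriptors):
    """Return (min, max) per descriptor so axes can be put on a common scale."""
    ranges = {}
    for d in descriptors:
        values = [u[d] for u in units]
        lo, hi = min(values), max(values)
        # Guard against a zero-width range when all values are equal.
        ranges[d] = (lo, hi if hi > lo else lo + 1.0)
    return ranges


def distance(unit, target, ranges):
    """Euclidean distance in range-normalised descriptor space (an assumed metric)."""
    total = 0.0
    for d, (lo, hi) in ranges.items():
        diff = (unit[d] - target[d]) / (hi - lo)
        total += diff * diff
    return math.sqrt(total)


def select_units(units, target, ranges, k=1):
    """Return the k units closest to the target position."""
    return sorted(units, key=lambda u: distance(u, target, ranges))[:k]


ranges = descriptor_ranges(corpus, DESCRIPTORS)
target = {"pitch_hz": 450.0, "loudness_db": -27.0}
nearest = select_units(corpus, target, ranges, k=2)
print([u["id"] for u in nearest])  # prints [2, 0]
```

In an interactive setting the target would move continuously (for example, following a mouse position or a live input analysis), and the selected units would be played back as grains. A linear scan is enough for a toy corpus; a large corpus would normally call for a spatial index.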

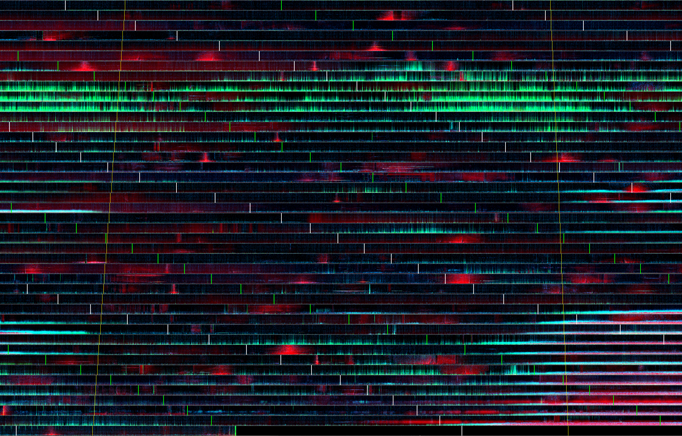

Getting to Know Big Audio Data – Alice Eldridge

When machine listening methods are used to address empirical questions – or even to look for general qualitative trends – we necessarily need to work with audio data sets which exceed listenable compass. We can’t listen to it all, and this can hamper interpretation of subsequent models. I will present some existing research in the visualisation of long-form audio recordings and invite participants to brainstorm and hack around with ideas for perceptualisation of audio features which might afford rapid, deep listening for large audio databases.